Syntax is Dead! Long Live Syntax!

Grammar as an emergent shadow of generative language

There are patterns in language, recurring ways that words and phrases tend to appear together, with certain positions and relationships showing up again and again. Every language has them. We call them grammar, or more precisely, syntax. But no one knows exactly how, or why, these patterns got there.

People have long observed these patterns, but they have interpreted them differently across eras. In parts of the ancient world, grammar was a prescriptive art, a tool of rhetoric and persuasion. Medieval scholars saw it as mirroring divine logic or cosmic order. Enlightenment thinkers cast it as a rational ideal, a reflection of reason itself. Across these traditions, grammar was more than etiquette: it was treated as something fundamentally real, an expression of deep principles underlying thought and language alike.

The behaviorist turn in the early 20th century rejected this view. Language was treated as a set of learned associations between stimuli and responses. Instead of viewing grammar as reflecting some hidden generative mechanism, behaviorists focused on describing observable patterns and leaving the rest to a generalized learning process. Grammar, in this model, was simply a label for consistent contingencies in the data.

Then came the Chomskyan revolution. In his 1959 critique of Skinner’s Verbal Behavior, Noam Chomsky argued that behaviorism couldn’t account for the generative nature of language, its ability to produce and understand an infinite variety of novel sentences not derivable from experience alone. To explain this, he introduced the notion of an innate syntactic system. Syntax, on this view, wasn’t just a pattern found in language; it was the computational engine that made language possible. Grammar was internal, rule-based, recursive, instantiated in the brain as a kind of computational inheritance.

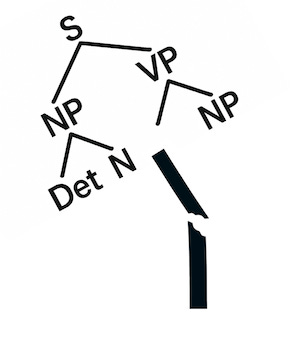

This view dominated linguistics for decades. Syntax was treated as a real mental mechanism: a set of symbolic rules governing phrase structure, hierarchies, and transformations. The goal of linguistic theory was to uncover this hidden code, to reveal the deep, universal structure that generated surface forms. Grammar was no longer just a description of patterns. It was the generator of language itself.

Then along came large language models (LLMs), and everything changed once again. These systems generate fluent, coherent, context-sensitive language across every domain without any built-in syntax, symbolic rules, or explicit grammatical knowledge. The basic task they are trained on is remarkably simple: predict the next word (strictly, the next token) from the words that came before it. At generation time, each prediction is appended to the input and fed back in to produce the next one. This one simple trick, called autoregression, applied to massive amounts of data to train layers of learnable parameters, is enough to ‘solve’ fully grammatical language at a human level. And it’s not just their outputs: when we probe their internal representations, for example with structural probes that test whether distances between word vectors track distances in a parse tree, we find that LLMs spontaneously develop representations that encode syntactic relationships with striking precision, implicitly tracking parse-tree distances, hierarchical dependencies, and grammatical roles. In other words, these models learn to organize words in ways that closely mirror linguistic syntax without ever being explicitly taught what syntax is.
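The autoregressive loop itself can be sketched in a few lines. This is a minimal illustration, not how an LLM works internally: a hypothetical bigram model (a table counting which word follows which) stands in for the neural network, the three-sentence corpus is invented, and the names `train_bigram` and `generate` are ours. What it shares with an LLM is the loop: predict a next word, append it, and condition the next prediction on what has been generated so far (here, only the last word).

```python
import random
from collections import Counter, defaultdict

BOS, EOS = "<s>", "</s>"  # sentence-boundary markers (an illustrative convention)

def train_bigram(corpus):
    """Hypothetical toy model: count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = [BOS] + sentence.split() + [EOS]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, rng, max_len=12):
    """Autoregression: sample a next word, append it, and feed the longer sequence back in."""
    sequence = [BOS]
    for _ in range(max_len):
        followers = counts.get(sequence[-1])  # a bigram model only looks at the last word
        if not followers:
            break
        words = list(followers)
        weights = [followers[w] for w in words]
        next_word = rng.choices(words, weights=weights, k=1)[0]
        if next_word == EOS:
            break
        sequence.append(next_word)
    return sequence[1:]  # drop the start marker

corpus = ["the dog chased the cat", "the cat chased the mouse", "a dog saw the cat"]
model = train_bigram(corpus)
print(" ".join(generate(model, random.Random(0))))
```

Nothing in the count table mentions nouns, verbs, or phrases, yet every sampled sentence respects the word-order regularities present in the three training sentences.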

This discovery fundamentally inverts our understanding of the relationship between syntax and language generation. The structure is real, more real perhaps than Chomsky imagined, but the causal story is completely backwards. In the Chomskyan framework, syntax is the engine: generative rules transform abstract structures into concrete sentences. Grammar is causally prior, the mechanism that makes language possible. But LLMs demonstrate that syntax can emerge as a consequence rather than a cause. These models learn to predict the next word through statistical pattern learning, and syntactic structure crystallizes as a byproduct of that optimization process. The hierarchical relationships, the parse trees, the grammatical dependencies: they're all there in the learned representations, but they're downstream effects of predictive success, not upstream generative rules.
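The "parse-tree distances" that probing studies look for are simple to compute. The structural probe of Hewitt and Manning (2019), for instance, trains a linear map so that squared distances between a model's word vectors approximate the number of edges separating the same words in a dependency parse. The sketch below computes only that target quantity; the parse of "the dog chased the cat" and the function name `tree_distances` are illustrative assumptions, not output from any real parser.

```python
from collections import deque

def tree_distances(heads):
    """Pairwise path lengths in a dependency tree; heads[i] is word i's head index (-1 = root)."""
    n = len(heads)
    neighbors = [[] for _ in range(n)]
    for child, head in enumerate(heads):
        if head >= 0:  # treat each dependency arc as an undirected edge
            neighbors[child].append(head)
            neighbors[head].append(child)
    dist = [[0] * n for _ in range(n)]
    for start in range(n):
        seen = {start: 0}  # breadth-first search from each word
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nb in neighbors[node]:
                if nb not in seen:
                    seen[nb] = seen[node] + 1
                    queue.append(nb)
        for node, d in seen.items():
            dist[start][node] = d
    return dist

words = ["the", "dog", "chased", "the", "cat"]
heads = [1, 2, -1, 4, 2]  # hypothetical parse: "chased" is the root
dist = tree_distances(heads)
print(dist[1][4])  # 2 edges: dog -> chased -> cat
```

Note that the two words "the" are adjacent to their nouns in the tree but four edges apart from each other; a probe succeeds when the model's vector geometry reproduces distances like these, which linear word position alone does not.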

This reframing dissolves the old dichotomy between grammar as a mere pattern in the data and grammar as a generative engine in the mind. Grammar is not a generative system inside the mind. It is a pattern in the data that molds the generative mind. But this just raises a deeper question: if minds learn grammar by detecting regularities already present in linguistic data, what are those regularities doing there in the first place? Why does language data contain such systematic patterns to begin with?

While I don’t claim to have the solution to this new riddle of syntax, I believe the answer likely lies in understanding grammar as an emergent, optimized solution to a multi-objective problem. Grammar is not a monolithic entity, but a dynamic statistical structure that arises from the convergence of several powerful pressures.

First, consider language as a behavioral coordination tool. The world is full of "things" and "doings," and to coordinate our actions, we need a reliable way to map these entities and their relationships onto a public signal. Syntax, through word order, grammatical case, and other devices, provides a predictable "behavioral contract" that lets us distinguish who is doing what to whom. Communication systems that adopted these regularities would have coordinated behavior more successfully and so been more likely to be replicated.

Second, consider the generative process itself. A language system must be efficient. Whether it's a brain or an AI, the process of generating the next word or phrase must be computationally tractable. This pressure favors constrained generation, where the system's output is guided by statistical regularities that narrow the vast space of possibilities. The constraints aren't arbitrary; they are the grammar, the very things that make language both predictable and useful.
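One way to make "narrowing the space of possibilities" concrete is entropy: the number of bits of uncertainty that remain about the next word. The sketch below, which assumes simple plug-in estimates from raw counts and a toy token sequence of our own, compares the entropy of the next word on its own with its entropy once the previous word is known. For these estimates the conditional figure can never exceed the unconditional one; how much lower it is measures how much the regularities constrain generation.

```python
import math
from collections import Counter

def entropy(counts):
    """Shannon entropy in bits of the distribution implied by raw counts."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def next_word_entropies(tokens):
    """Return (H(next), H(next | previous word)), both estimated from bigram counts."""
    pairs = Counter(zip(tokens, tokens[1:]))
    prev_counts = Counter(tokens[:-1])
    total = len(tokens) - 1  # number of (previous, next) pairs
    unconditional = entropy(Counter(tokens[1:]))
    conditional = -sum(
        (c / total) * math.log2(c / prev_counts[prev]) for (prev, _), c in pairs.items()
    )
    return unconditional, conditional

tokens = "the dog chased the cat and the cat chased the dog".split()
h_next, h_next_given_prev = next_word_entropies(tokens)
print(f"H(next) = {h_next:.2f} bits, H(next | prev) = {h_next_given_prev:.2f} bits")
```

Longer contexts, which a neural model can use, narrow the distribution further still; the bigram case is simply the smallest example that shows the effect.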

This dynamic of optimization is mirrored in the statistical properties of language, such as Zipf's Law. The law states that a word's frequency is roughly inversely proportional to its frequency rank, so a few words are used very often while most are rare. Zipf himself argued that this is no accident, explaining it as a compromise between a speaker's desire for ease (using a small, familiar vocabulary) and a listener's need for clarity (using a rich vocabulary to avoid ambiguity). In our autogenerative framework, Zipf's Law and syntax are two sides of the same coin: one is the statistical footprint, and the other the structural scaffolding, of a system optimized for both predictability and flexibility. The high-frequency words, such as "the," "of," and "and," are highly predictable and serve as the structural glue of grammar, while the vast number of low-frequency words provides the expressive power.
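Zipf's Law is often written as f(r) ∝ 1/r^s with s close to 1, where f is a word's frequency and r its rank. A minimal way to check a text against it, assuming nothing beyond the Python standard library, is to fit a straight line to log frequency against log rank: a slope near −1 is the Zipfian signature. The helper names `rank_frequency` and `zipf_slope` are ours, and the word counts in the usage lines are constructed to be Zipfian by design rather than taken from real text.

```python
import math
from collections import Counter

def rank_frequency(tokens):
    """Word frequencies sorted from most to least common, so index 0 is rank 1."""
    return [count for _, count in Counter(tokens).most_common()]

def zipf_slope(freqs):
    """Least-squares slope of log(frequency) against log(rank); Zipf's law predicts about -1."""
    xs = [math.log(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Synthetic corpus: hypothetical word w{r} appears round(1000 / r) times, Zipfian by construction.
tokens = [f"w{r}" for r in range(1, 51) for _ in range(round(1000 / r))]
print(round(zipf_slope(rank_frequency(tokens)), 2))
```

Run on a real corpus, the same two functions give the slope for that text; natural-language corpora typically land near −1, though the fit is usually poorer at the extreme head and tail of the ranking.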

This perspective suggests that humans are special not because we possess unique syntactic faculties, but because we have exceptionally sophisticated pattern-learning mechanisms. When such systems are applied to the problem of communication and behavioral coordination, syntactic structure emerges as a natural solution to the fundamental challenges of predictability and flexibility.

So the script has been flipped. Syntax is real, structured, and remarkably consistent, but it is a consequence, not a cause. It is the inevitable shadow cast when minds learn to predict the patterns of human communication.

In a curious way, we have come full circle to the Enlightenment ideal of language as a key to the structure of mind, but with the causality reversed. Instead of grammar revealing pre-existing rational principles, the patterns we call grammar reveal the fundamental nature of learning itself. The core engine of autoregression, predicting the next element in a sequence based on statistical patterns, may apply far more broadly than language alone. Perhaps this is how minds organize experience across domains: not through innate modules or explicit rules, but through the emergent structures that crystallize when prediction-based learning encounters the regularities of the world. Grammar becomes a window not into Universal Grammar, but into the universal principles of how intelligence bootstraps itself from pattern and prediction.

> Perhaps this is how minds organize experience across domains: not through innate modules or explicit rules, but through the emergent structures that crystallize when prediction-based learning encounters the regularities of the world.

It'll be very interesting to compare ideas of language modules and universal grammar to the idea of emotion modules and universal emotions. The theory of constructed emotion has presented a lot of evidence against the latter, and a powerful theoretical alternative. Lots of connections to explore!

Describe the process through which words group together and you've got the theory of everything (TOE).

Isn't it the same process that generates gravity? People may scoff because everyone knows that E = mc² is the process that gravity follows, right? But how is it that the same process can generate almost perfectly realistic movement in videos? It's not like E = mc² was put into the code of the algorithms producing these amazingly realistic videos. NONE of the movement in these videos is following any "laws of physics". The laws themselves emerged out of the process you are presenting.

This is the end of physics.